Artificial intelligence has taken center stage in nearly every part of the hiring process—from resume screening to video interviews to predictive assessments. And while the right tools can transform hiring efficiency and accuracy, the wrong ones can expose companies to ethical, legal, and reputational risk.

The challenge? Knowing the difference.

Harver’s new guide, “AI in Hiring Solutions: A Guide for Ethical Evaluation,” helps HR, TA, and compliance professionals evaluate AI-powered hiring tools through a clear and legally sound lens. If you’re navigating AI for the first time—or looking to upgrade your current hiring tech—this guide offers practical, no-nonsense advice to help you make smart, compliant, and inclusive decisions.

Here’s what’s at stake—and how the guide can help.

The Promise and Pitfalls of AI in Hiring

At its best, AI streamlines high-volume hiring, reduces bias, and matches people to roles more efficiently. But not all AI is created equal. In a market filled with opaque algorithms and “black box” hiring tools, it’s difficult to know what the technology is actually doing—or whether it’s doing it fairly.

Poorly implemented AI can introduce (or exacerbate) bias, violate privacy laws, and erode trust with candidates. It can even result in legal exposure if decisions are made using unvalidated or discriminatory criteria. That’s why it’s crucial to ask the right questions before investing in AI-based hiring software.

How Harver’s AI Evaluation Guide Can Help

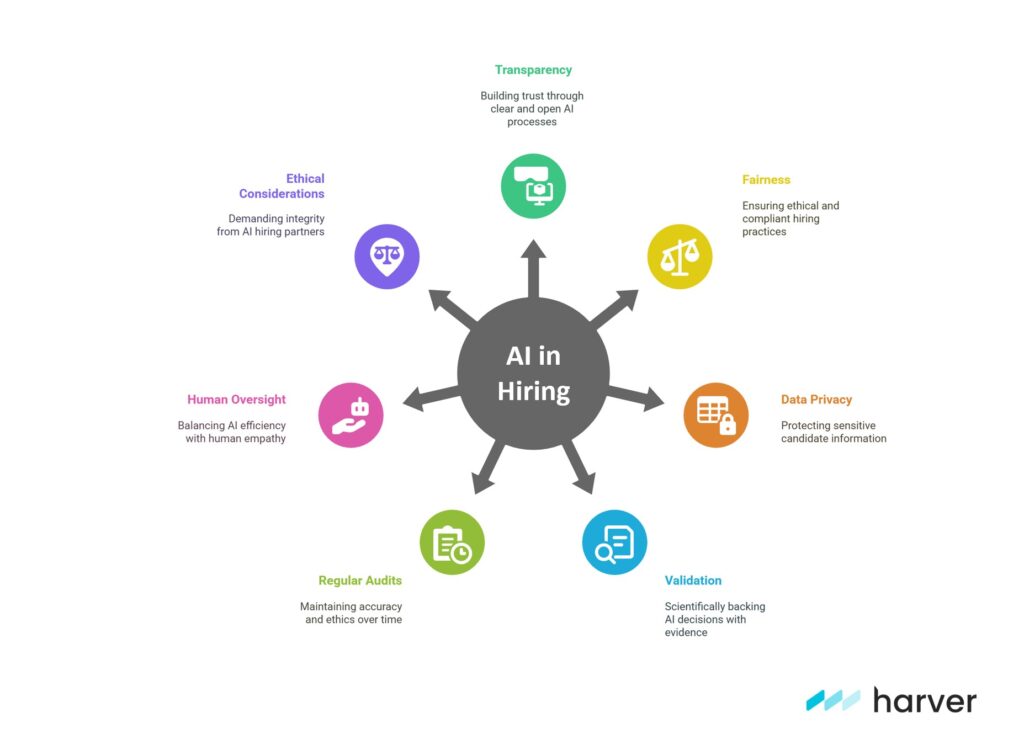

Harver’s guide walks you through seven key areas to consider when evaluating AI tools. It’s not a sales pitch—it’s a practical framework based on decades of science and experience working with top employers around the world.

1. Transparency & Explainability

Transparency is the foundation of trust.

2. Bias & Fairness

Ensuring fairness isn’t just ethical—it’s good business and a compliance necessity.

3. Data Privacy & Security

Because AI tools process sensitive candidate data, vet each vendor’s compliance with privacy laws and data protection requirements.

4. Validation & Reliability

Decisions and assessments should be scientifically validated and backed by empirical evidence, not guesswork.

5. Regular Audits & Updates

Routine reviews are essential: they keep models accurate and ethically sound over time.

6. Human Oversight & Candidate Experience

The ideal approach balances technological efficiency with human empathy.

7. Ethical Considerations

Companies should demand not just functionality but also integrity from their hiring partners.

Why This Guide Matters Now

Regulators are increasingly scrutinizing the use of AI in hiring. Candidates are more attuned than ever to issues of fairness and transparency. And organizations are under pressure to meet ambitious DEI goals while maintaining speed and scale.

Harver’s guide arms you with essential questions to ask vendors, so you can identify red flags, compare options confidently, and select a solution that aligns with your goals and values.

Harver’s Approach to AI

Harver is committed to using AI ethically and responsibly. We combine the best of behavioral science, validated assessments, and thoughtfully applied AI to create ethical, data-driven hiring processes that scale.

Rather than replacing human judgment, Harver empowers talent teams with tools that surface real, job-relevant insights—so you can make faster, smarter decisions with confidence. It’s AI with accountability. Automation with intention. And a commitment to candidate experience and compliance built into every click.

In short: at Harver, we don’t use AI to cut corners. We use it to elevate your process and align every decision with science, strategy, and scale.

If you’re exploring AI hiring tools—or want to make sure your current provider measures up—“AI in Hiring Solutions: A Guide for Ethical Evaluation” is the resource you need.

Let’s make hiring smarter, safer, and more human—with AI that works for people, not around them.