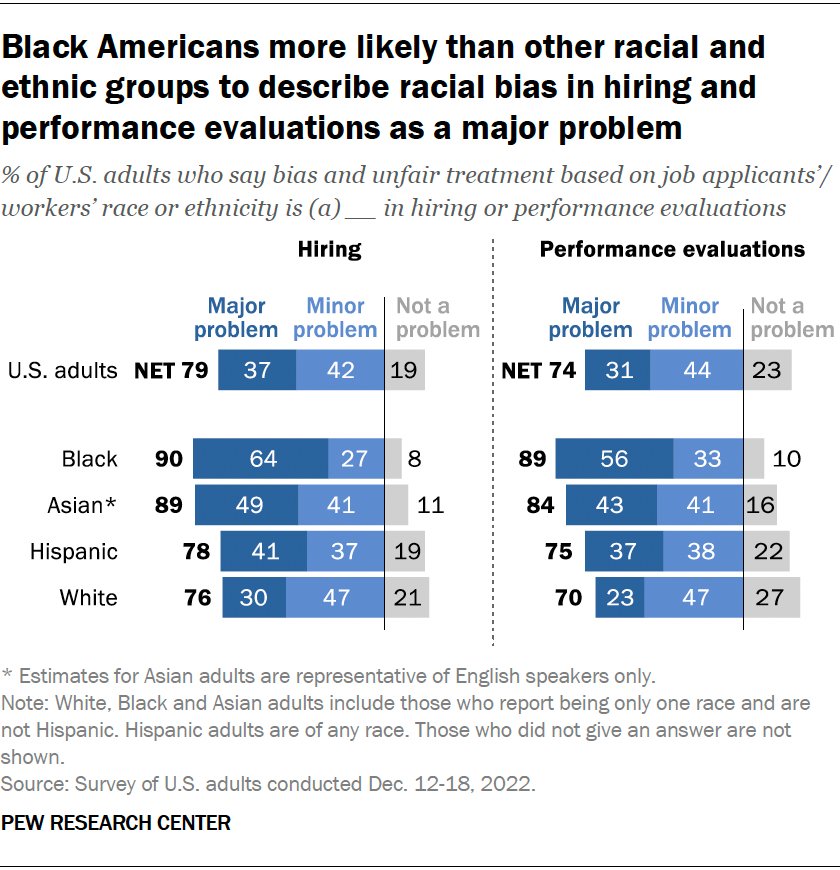

The Pew Research Center released a detailed report on the American public’s views on AI in recruiting and hiring, and the results are rather interesting. A few things stood out to us, including:

- 79% say racial and ethnic bias is a problem in hiring

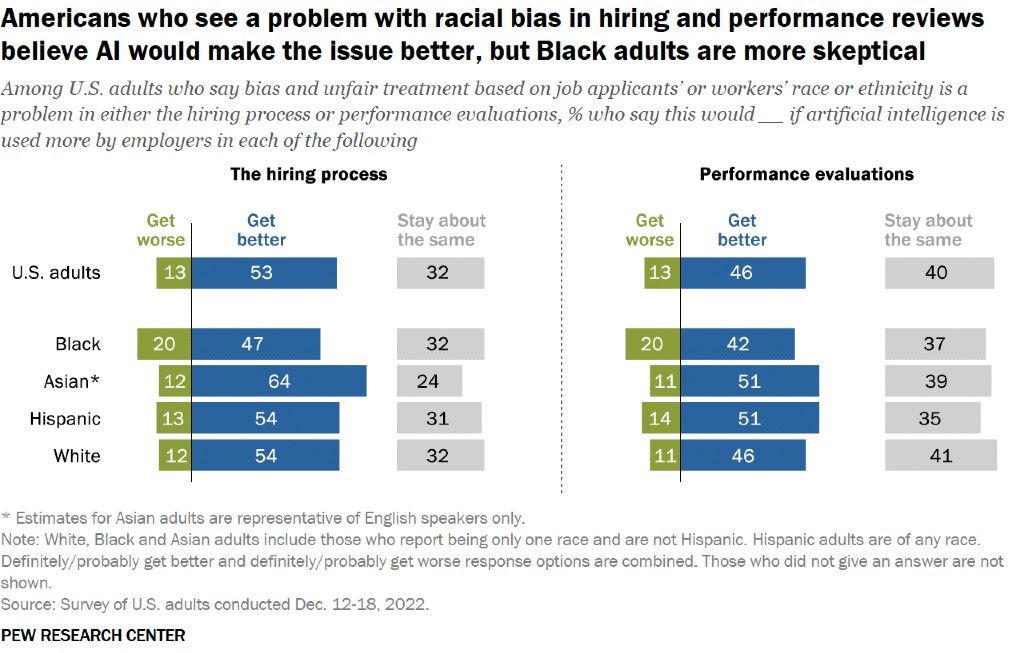

- 53% say AI can help with leveling the playing field

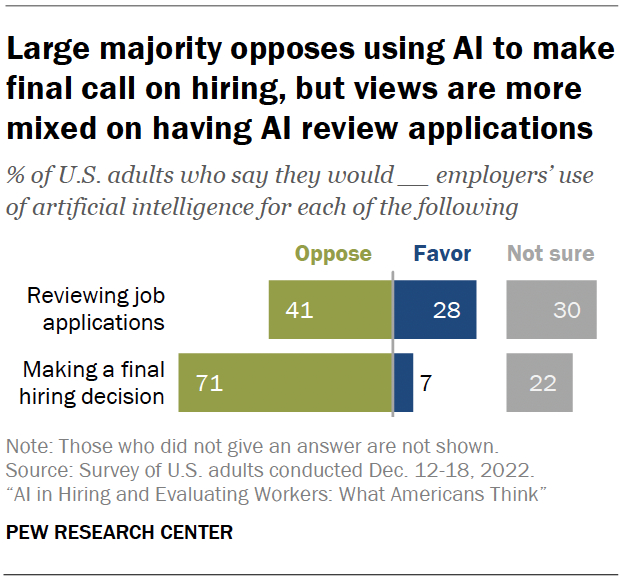

- 28% favor AI for reviewing applications compared to just 7% for making final hiring decisions

Clearly, there’s a need to mitigate unconscious bias and improve fairness in hiring. US public sentiment about the scope of AI in recruiting and hiring is also thought-provoking: support for top-of-the-funnel use (28%) is 4x the support for AI making final hiring decisions (7%).

For a primer on how AI can be used ethically to support fair hiring decisions, watch this video featuring Dr. Frida Polli (formerly CEO of pymetrics and now an advisor to Harver following its acquisition of pymetrics in 2022).

Bias in hiring is a problem

It’s no surprise that Pew Research Center data shows that nearly 8 in 10 American adults say racial and ethnic bias is a problem in hiring. Unconscious bias can cause good-fit candidates to be overlooked, so both diverse applicants and the employers who need them miss out.

Based on discussions at RecFest 2023 and elsewhere, People teams are now focusing on how best to improve diversity. The ongoing adoption of AI in recruiting and hiring raises the question of how and when best to apply it.

Survey data also shows that Americans are about 4 times as likely to say that employers’ use of AI would improve fair hiring as to say it would make it worse. More than half (53%) believe AI in recruiting can help minimize bias, compared with 13% who think it would make things worse.
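For readers who want to check the "4 times" comparisons, here is a minimal sketch of the arithmetic. The helper name `favor_ratio` is our own illustration and is not part of the Pew report; the percentages are the ones quoted in this article.

```python
def favor_ratio(favorable_pct: float, unfavorable_pct: float) -> float:
    """Return how many times larger the first share is than the second."""
    if unfavorable_pct <= 0:
        raise ValueError("unfavorable_pct must be positive")
    return favorable_pct / unfavorable_pct


# Shares quoted in this article from the Pew Research Center survey.
# 53% say AI would improve fair hiring vs. 13% who say it would not.
improve_vs_worsen = favor_ratio(53, 13)
# 28% favor AI reviewing applications vs. 7% who favor AI making final decisions.
review_vs_decide = favor_ratio(28, 7)

print(f"Improve vs. worsen: {improve_vs_worsen:.1f}x")
print(f"Review vs. final decision: {review_vs_decide:.1f}x")
```

Both comparisons come out at roughly 4 to 1, which is where the "4 times" and "4x" figures come from.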

Using AI in recruiting to level the playing field for candidates

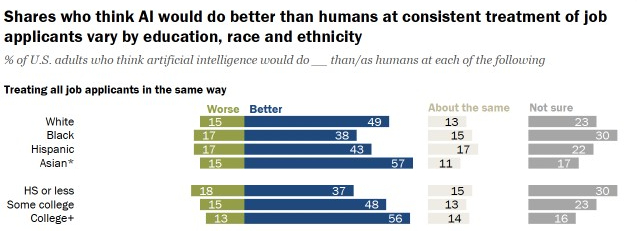

Holding all candidates to a common standard is critical for fair hiring, and Americans think AI can help employers standardize their hiring processes. In fact, Pew Research Center data notes that this leveling of the playing field is the top way AI can improve on humans in the hiring process. 47% of survey respondents say AI in recruiting can do better than human recruiters when it comes to treating all applicants consistently. Compare that with just 15% who think humans are better at maintaining a common standard.

Looking for an automated hiring solutions provider that can help you deliver a consistent candidate experience and an objective hiring method? Some HR tech providers are committed to supporting a common standard when evaluating applicants. For instance, Harver’s People Science team follows rigorous processes to debias and validate our behavioral, cognitive, and other assessments.

For more on how AI can help eliminate bias from hiring, read this Harvard Business Review article from Dr. Frida Polli.

Eliminating bias is good business

Learn how AI can lower hiring costs and increase innovation.

How can AI be used in the hiring process?

The Pew Research Center survey focused on the American public’s sentiment regarding AI at two stages of the hiring process. The first is the top of the funnel, during the screening or application stage. The second is the final decision to extend a job offer to a candidate.

While the majority of respondents oppose AI in hiring (partly due to misconceptions or lack of awareness), those who do favor AI usage by employers greatly prefer that it be used for hiring decision support. 28% are in favor of hiring organizations using AI to review job applications, while only 7% support artificial intelligence being the final arbiter of a hiring decision.

One reason is the public’s concern that AI in recruiting misses the “human factor” of hiring. Lawmakers are also interested in this topic, as evidenced by New York City’s Bias Audit Law and similar initiatives in Europe and California.

To be clear, Harver’s automated hiring solutions aren’t powered by black-box AI that makes final hiring decisions without human oversight and action. Harver’s validated pre-hire assessments are backed by decades of industrial-organizational science and cognitive science. We recommend that employers implement assessments early in the hiring process, such as during the application stage. The resulting data provides objective decision support for screening; it is not meant to act as the final decision maker on which candidate to hire.

Have questions about how to remove bias from hiring?

Schedule a call to ask our experts how your organization can minimize bias and hire fairly. We’re here to help advise you on what makes the most sense based on your business challenges and goals.

While we love the efficiency of automation, that “human touch” matters to us, too. Perhaps it’s one of the reasons why Human Resources Director named Harver a 5-Star Software and Technology Provider 2023.